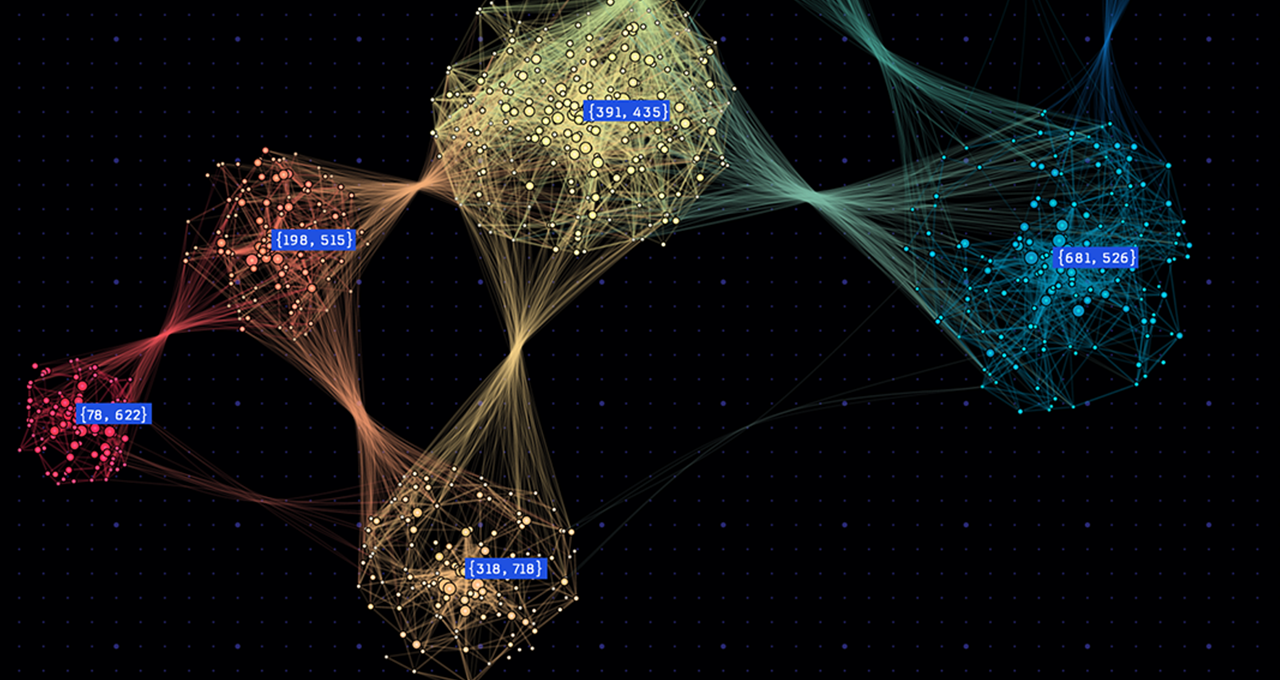

NVIDIA achieved a Graph500 benchmark result of 410 trillion traversed edges per second (TEPS) using a GPU-based system hosted on CoreWeave’s AI cloud platform. The run used 8,192 H100 GPUs to process a graph with trillions of vertices and edges. The result was reached with fewer nodes than comparable systems, which suggests higher per-node efficiency and reflects differences in system design. NVIDIA’s approach relied on its integrated compute, networking, and software stack, including CUDA, Spectrum-X networking, and an active messaging library.
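For a rough sense of scale, dividing the aggregate rate of 410 trillion traversed edges per second (TEPS) evenly across the 8,192 GPUs gives an average per-GPU figure. This is only arithmetic on those two numbers, not a per-GPU measurement reported for the run:

```latex
\frac{410 \times 10^{12}\ \text{TEPS}}{8192\ \text{GPUs}} \approx 5.0 \times 10^{10}\ \text{TEPS per GPU}
```

That is roughly 50 billion traversed edges per second for each GPU, on average.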

The benchmark reflects the system’s ability to handle sparse, irregular data, which is common in applications such as social networks, financial systems, and cybersecurity. Graph500 measures how quickly a system can traverse such graphs using breadth-first search (BFS), so the score depends on how efficiently the system handles communication between nodes and how well it uses memory bandwidth.
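A minimal, single-machine sketch of the quantity Graph500 reports: a BFS from a root vertex that records each vertex's parent, timed to give traversed edges per second (TEPS). This is a simplification under stated assumptions: the helper names (`build_adj`, `bfs_parents`, `traversed_edges`, `measure_teps`) are hypothetical, the graph is a tiny hand-written undirected graph rather than the benchmark's large generated graphs, and the edge count follows the spirit of the benchmark (edges in the component reached from the root) rather than the exact rules of its specification.

```python
import time
from collections import deque


def build_adj(num_vertices, edges):
    """Build adjacency lists for an undirected graph from (u, v) edge tuples."""
    adj = [[] for _ in range(num_vertices)]
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    return adj


def bfs_parents(adj, root):
    """Breadth-first search that returns a parent array (the BFS tree).

    Unreached vertices keep parent -1; the root is recorded as its own parent.
    """
    parent = [-1] * len(adj)
    parent[root] = root
    frontier = deque([root])
    while frontier:
        u = frontier.popleft()
        for v in adj[u]:
            if parent[v] == -1:  # first visit wins
                parent[v] = u
                frontier.append(v)
    return parent


def traversed_edges(adj, parent):
    """Count undirected edges inside the component reached from the root.

    Assumes no self-loops: each edge then appears exactly twice in the
    adjacency lists, so the summed degree of reached vertices is halved.
    """
    return sum(len(adj[u]) for u in range(len(adj)) if parent[u] != -1) // 2


def measure_teps(adj, root):
    """Time one search and return (parent, edges_traversed, teps)."""
    start = time.perf_counter()
    parent = bfs_parents(adj, root)
    # Guard against a zero timer delta on very small graphs.
    elapsed = max(time.perf_counter() - start, 1e-9)
    edges = traversed_edges(adj, parent)
    return parent, edges, edges / elapsed


if __name__ == "__main__":
    adj = build_adj(6, [(0, 1), (1, 2), (2, 3), (0, 3), (4, 5)])
    parent, edges, rate = measure_teps(adj, root=0)
    print(parent)  # [0, 0, 1, 0, -1, -1]; vertices 4 and 5 are unreachable
    print(edges)   # 4
    print(f"{rate:.3e} TEPS")
```

At benchmark scale the difficult part is not this loop but the fact that the graph is partitioned across many GPUs, so most neighbor checks become messages between nodes.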

NVIDIA reworked graph processing by enabling GPU-to-GPU active messaging, in which a message carries both data and the operation to run on it at the destination, through InfiniBand GPUDirect Async and NVSHMEM. This design removes the CPU from the communication path and allows graph data to be processed where it resides. The result suggests the approach may extend to other high-performance computing workloads with irregular data access patterns, including scientific fields that rely on sparse data structures.
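The active-messaging idea can be illustrated with a single-process simulation of a level-synchronous distributed BFS. Each simulated rank owns the vertices with `v % num_ranks == rank`; instead of reading a remote vertex's state, a rank sends a small message `(vertex, proposed_parent)` to the owner, and the owner's handler decides whether to visit it. This is a hypothetical sketch only: `distributed_bfs` and the modulo partitioning are assumptions for illustration, and it does not use or model the NVSHMEM or GPUDirect Async APIs.

```python
def distributed_bfs(adj, root, num_ranks):
    """Simulated distributed BFS using owner-computes active messages.

    adj: adjacency lists of an undirected graph; in a real system each rank
    would store only the lists of the vertices it owns.
    Returns a parent array with -1 for unreached vertices.
    """

    def owner(v):
        # Hypothetical partitioning: round-robin by vertex id.
        return v % num_ranks

    # Per-rank state, only ever read or written by the owning rank.
    parent = [dict() for _ in range(num_ranks)]
    frontier = [[] for _ in range(num_ranks)]
    parent[owner(root)][root] = root
    frontier[owner(root)].append(root)

    while any(frontier):
        # Phase 1: every rank expands its local frontier. u is owned by r, so
        # adj[u] is local data; each neighbor becomes a message to its owner.
        inbox = [[] for _ in range(num_ranks)]
        for r in range(num_ranks):
            for u in frontier[r]:
                for v in adj[u]:
                    inbox[owner(v)].append((v, u))

        # Phase 2: each owner runs the message handler where the vertex state
        # lives; the first message to reach an unvisited vertex sets its parent.
        for r in range(num_ranks):
            next_frontier = []
            for v, u in inbox[r]:
                if v not in parent[r]:
                    parent[r][v] = u
                    next_frontier.append(v)
            frontier[r] = next_frontier

    # Gather the per-rank results into one parent array (for inspection only).
    merged = [-1] * len(adj)
    for rank_parent in parent:
        for v, p in rank_parent.items():
            merged[v] = p
    return merged


if __name__ == "__main__":
    adj = [[1, 3], [0, 2], [1, 3], [2, 0], [5], [4]]
    print(distributed_bfs(adj, root=0, num_ranks=2))  # [0, 0, 1, 0, -1, -1]
```

Which parent a vertex records depends on message order, so different rank counts can produce different but equally valid BFS trees; each vertex's distance from the root stays the same.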
