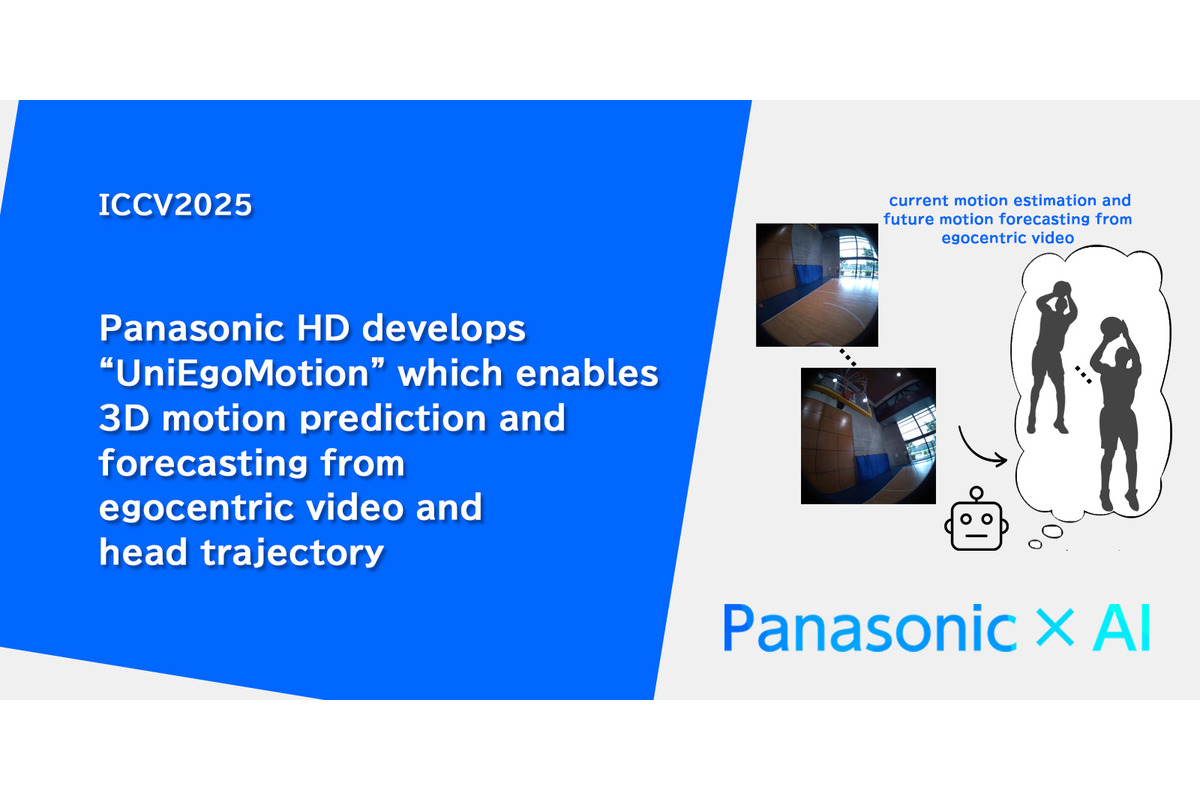

Panasonic Holdings and Panasonic R&D Company of America, in collaboration with researchers at Stanford University, have developed “UniEgoMotion”, an AI model that performs 3D motion estimation, forecasting, and generation from egocentric video and head trajectory alone, without relying on third-person views or scene data.

Presented at ICCV 2025 in Hawaii, the technology addresses long-standing challenges in understanding human motion from a first-person perspective, a problem that has grown more pressing with the spread of wearable cameras and smart glasses. UniEgoMotion combines a motion diffusion model with a head-centric motion representation and a DINOv2-based image encoder to deliver high-accuracy motion reconstruction and forecasting. Its masking strategy lets a single model handle multiple tasks, including motion generation.

In testing, UniEgoMotion outperformed existing methods in pose accuracy and motion realism. The technology opens new opportunities across VR/AR, industrial assistance, sports training, and healthcare rehabilitation, enabling real-time motion analysis and predictive modeling.

Panasonic plans to further develop and commercialize this AI capability to improve efficiency, safety, and personalization in real-world environments, reinforcing its commitment to advancing human-centric AI.
